Research

Hokkaido University

Human-Computer Interaction lab

Our research spans a wide range of topics: interactive social robots and agents (Human-Robot Interaction and Human-Agent Interaction); XR, including Virtual, Augmented, and Mixed Reality (VR/AR/MR); user interface technologies that apply Artificial Intelligence (AI) and Machine Learning (ML) techniques; and user experience and interaction design, which are essential for modern product design and development.

Human-Agent Interaction (HAI)

Human-Robot Interaction (HRI)

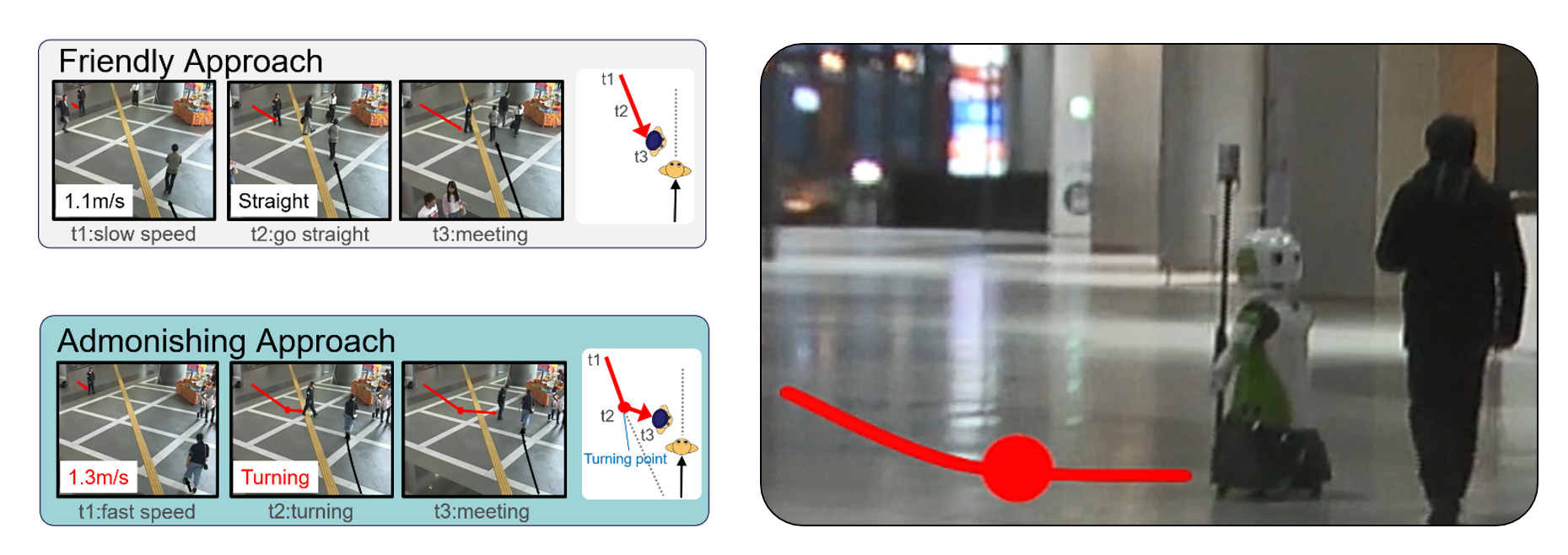

Stop doing it! Approaching Strategy for a Robot to Admonish Pedestrians

Kazuki Mizumaru, Satoru Satake, Takayuki Kanda, Tetsuo Ono

2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI)

We modeled a robot's approaching behavior for giving admonishment. We started by analyzing human behaviors. We conducted a data collection in which a guard approached others in two ways: 1) for admonishment, and 2) for a friendly purpose. We analyzed the difference between the admonishing approach and the friendly approach. The approaching trajectories in the two approaching types are similar; nevertheless, there are two subtle differences. First, the admonishing approach is slightly faster (1.3 m/sec) than the friendly approach (1.1 m/sec). Second, at the end of the approach, there is a “shortcut” in the trajectory. We implemented this model of the admonishing approach in a robot. Finally, we conducted a field experiment to verify the effectiveness of the model. The robot was used to admonish people who were using a smartphone while walking. The results show that significantly more people yielded to admonishment from a robot using the proposed method than from a robot using the friendly approach method.

A Subtle Effect of Inducing Positive Words by Playing Web-Based Word Chain Game

Naoki Osaka, Kazuki Mizumaru, Daisuke Sakamoto, and Tetsuo Ono.

9th International Conference on Human-Agent Interaction (HAI '21).

A variety of chatbots have been developed to elicit people’s emotional responses and manage their emotions. These chatbots often use text-based emotional sentences. However, there are few studies in which chatbots use extremely simplified communication to induce emotional responses. We investigated the effect of mood contagion and tried to induce positive or negative words from people on our web-based “word chain game” system, which uses the most simplified conversational style. We also defined the system’s emotion by having the system use words that have polarity, that is, each word’s strength of positive or negative emotion. The results show that users of the system using positive words were slightly influenced to use more positive words than users of the neutral or negative system.
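The polarity-driven word choice described above can be illustrated with a minimal sketch. It assumes an English word chain rule (the next word starts with the last letter of the previous word) and a tiny hypothetical polarity lexicon; the `POLARITY` values and the `next_word` function are illustrative assumptions, not the system used in the study.

```python
# Hypothetical polarity lexicon: word -> polarity in [-1, 1] (illustrative values only).
POLARITY = {
    "apple": 0.6,
    "enemy": -0.8,
    "eagle": 0.3,
    "energy": 0.7,
    "yawn": -0.1,
}


def next_word(previous, used, polarity=POLARITY, mood="positive"):
    """Pick the system's next word in a word chain game.

    Assumed rule: the next word must start with the last letter of
    `previous` and must not have been used before. Among valid words,
    a "positive" system picks the highest polarity and a "negative"
    system picks the lowest.
    """
    last_letter = previous[-1]
    candidates = [w for w in polarity if w[0] == last_letter and w not in used]
    if not candidates:
        return None  # no valid word left: the system loses the round
    sign = 1 if mood == "positive" else -1
    return max(candidates, key=lambda w: sign * polarity[w])
```

For example, after the user plays "apple", a positive system in this sketch would answer with the most positive unused word starting with "e".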

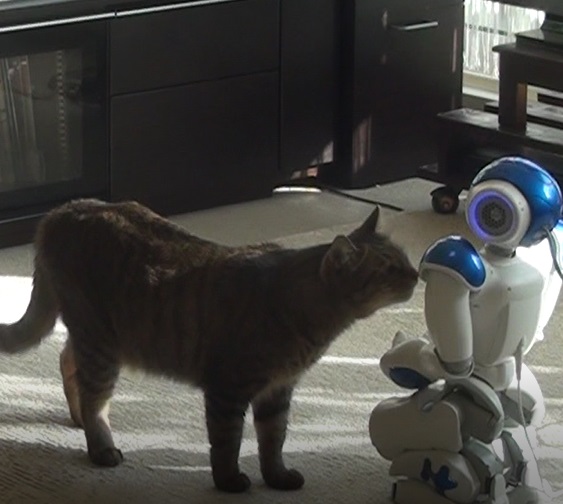

A Social Robot in a Human-Animal Relationship at Home: A Field Study

Haruka Kasuga, Daisuke Sakamoto, Nagisa Munekata, and Tetsuo Ono.

5th International Conference on Human Agent Interaction (HAI '17)

Pets have been humanity’s oldest friends since ancient times. People have lived with them ever since, and the relationship between people and pets as family members at home is well researched. Recently, social robots have been entering family lives, and a new research field is being born, namely, the triadic relationship between people, pets, and social robots. To investigate how a social robot affects a human-animal relationship in the home, an exploratory field experiment was conducted. In this experiment, a robot called NAO was introduced into the homes of 10 families, and 22 participants (with 12 pets: 4 dogs and 8 cats), called “owners” hereafter, were asked to interact with NAO. NAO was operated under two conditions: speaking positively to the pets, and speaking negatively to them. Only five sentences that NAO spoke to the pets and two sentences that it spoke to the owners differed between the conditions. The results of the study show that changing NAO’s attitude to the pets affected both the owners’ impression of the robot and the pets’ impression of the robot (as perceived by the owners).

Interaction Design

User Interface

SCAN: Indoor Navigation Interface on a User-Scanned Indoor Map

Kenji Suzuki, Daisuke Sakamoto, Sakiko Nishi, and Tetsuo Ono

21st International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI '19)

We present an indoor navigation system, SCAN, which displays the user's current location on a user-scanned indoor map. Smartphones use the global positioning system (GPS) to determine their position on the earth, but GPS does not work indoors. SCAN uses indoor map images scanned by a smartphone camera and displays the user's position on the indoor map while they move around a floor. It tracks the user's position using an inertial measurement unit (IMU) on the smartphone. Camera calibration is required for precise navigation, but our system estimates the user's position based on a single landscape image. Results of our preliminary user study suggest that participants' experiences were similar to using outdoor GPS navigation systems.
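IMU-based position tracking of this kind is often explained with pedestrian dead reckoning, where each detected step advances the position along the current heading. The sketch below shows that general idea only. The `dead_reckon` function, the per-step heading and step-length inputs, and the metre-based map coordinates are assumptions for illustration, not details of SCAN.

```python
import math


def dead_reckon(start, steps):
    """Trace a path from `start` using per-step heading and length.

    start: (x, y) position on the map in metres (assumed coordinate frame).
    steps: iterable of (heading_deg, step_length_m), with heading measured
           clockwise from the map's "up" direction.
    Returns the list of positions, including the starting point.
    """
    x, y = start
    path = [(x, y)]
    for heading_deg, length_m in steps:
        theta = math.radians(heading_deg)
        x += length_m * math.sin(theta)  # rightward (east) component
        y += length_m * math.cos(theta)  # upward (north) component
        path.append((x, y))
    return path
```

In this sketch, one step "up" followed by one step "right" of 1 m each moves the user from (0, 0) to approximately (1, 1); in practice such estimates drift and need correction.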

Pressure-sensitive zooming-out interfaces for one-handed mobile interaction

Kenji Suzuki, Ryuuki Sakamoto, Daisuke Sakamoto, and Tetsuo Ono

20th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI '18)

We present new alternative interfaces for zooming out on a mobile device: Bounce Back and Force Zoom. These interfaces are designed to be used with a single hand. They use a pressure-sensitive multitouch technology in which the pressure itself is used to zoom. Bounce Back senses the intensity of pressure while the user is pressing down on the display. When the user releases his or her finger, the view is bounced back to zoom out. Force Zoom also senses the intensity of pressure, and the zoom level is associated with this intensity. When the user presses down on the display, the view is scaled back according to the intensity of the pressure. We conducted a user study to investigate the efficiency and usability of our interfaces by comparing them with a previous pressure-sensitive zooming interface and the Google Maps zooming interface as a baseline. Results showed that Bounce Back and Force Zoom were evaluated as significantly superior to the interface from previous research, and that the number of operations was significantly lower than with both the default mobile Google Maps interface and the interface from previous research.
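The Force Zoom idea of tying the zoom level to pressure intensity can be sketched as a simple mapping. This is a minimal illustration that assumes pressure is normalized to [0, 1] and that the mapping is linear; the function name `force_zoom_scale` and the scale limits are hypothetical and not taken from the paper.

```python
def force_zoom_scale(pressure, min_scale=0.25, max_scale=1.0):
    """Map normalized touch pressure to a view scale.

    pressure: touch pressure, assumed normalized to [0, 1]; values outside
              the range are clamped.
    Returns max_scale at no pressure and min_scale at full pressure, so a
    harder press zooms the view out further (assumed linear mapping).
    """
    p = min(max(pressure, 0.0), 1.0)
    return max_scale - p * (max_scale - min_scale)
```

With these assumed limits, half pressure yields a scale of 0.625, midway between the unzoomed view and the most zoomed-out view.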

Virtual Reality (VR)

Augmented Reality (AR)

Mixed Reality (MR)

Kuiper Belt: Utilizing the “Out-of-natural Angle” Region in the Eye-gaze Interaction for Virtual Reality

Myungguen Choi, Daisuke Sakamoto, and Tetsuo Ono.

CHI Conference on Human Factors in Computing Systems (CHI '22)

The maximum physical range of horizontal human eye movement is approximately 45°. However, in a natural gaze shift, the difference in the direction of the gaze relative to the frontal direction of the head rarely exceeds 25°. We name this region of 25° to 45° the “Kuiper Belt” in eye-gaze interaction. We use this region to address the Midas touch problem, enabling a search task while reducing false input in a Virtual Reality environment. In this work, we conduct two studies to establish design principles for placing menu items in the Kuiper Belt as an “out-of-natural angle” region of eye-gaze movement, and to determine the effectiveness and workload of the Kuiper Belt-based method. The results indicate that the Kuiper Belt-based method facilitated the visual search task while reducing false input. Finally, we present example applications utilizing the findings of these studies.
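The angular regions named in the abstract can be expressed as a small classifier. The 25° and 45° thresholds come from the text above; the function name `gaze_region`, the region labels, and the handling of values exactly on a boundary are assumptions made for this sketch.

```python
NATURAL_LIMIT_DEG = 25.0   # natural gaze shifts rarely exceed this angle
PHYSICAL_LIMIT_DEG = 45.0  # approximate physical limit of horizontal eye movement


def gaze_region(eye_in_head_deg):
    """Classify a horizontal gaze angle measured relative to the head's front.

    Negative angles mean the opposite side; only the magnitude matters here.
    """
    angle = abs(eye_in_head_deg)
    if angle <= NATURAL_LIMIT_DEG:
        return "natural"
    if angle <= PHYSICAL_LIMIT_DEG:
        return "kuiper_belt"  # candidate area for menu items in this sketch
    return "out_of_range"
```

An interface built on this idea could treat input as deliberate only when the gaze enters the "kuiper_belt" region, since ordinary looking around mostly stays in the "natural" region.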

Interactive 360-Degree Glasses-Free Tabletop 3D Display

Motohiro Makiguchi, Daisuke Sakamoto, Hideaki Takada, Kengo Honda, and Tetsuo Ono.

32nd Annual ACM Symposium on User Interface Software and Technology (UIST '19)

We present an interactive 360-degree tabletop display system for collaborative work around a round table. Users are able to see 3D objects on the tabletop display from anywhere around the table without 3D glasses. The system uses a visual perceptual mechanism for smooth motion parallax in the horizontal direction with fewer projectors than previous works. A 360-degree camera mounted above the table and image recognition software detect users' positions around the table and the heights of their faces (eyes) in real time as they move around the table. These mechanisms help display correct vertical and horizontal motion parallax for different users simultaneously. Our system also has a user interaction function with a tablet device that manipulates 3D objects displayed on the table. These functions support collaborative work and communication between users. We implemented a prototype system and demonstrated the collaborative features of the 360-degree tabletop display system.

Embodied Interaction

Multimodal Interface

Bubble Gaze Cursor + Bubble Gaze Lens: Applying Area Cursor Technique to Eye-Gaze Interface

Myungguen Choi, Daisuke Sakamoto, and Tetsuo Ono

ACM Symposium on Eye Tracking Research and Applications (ETRA '20)

We conducted two studies exploring how an area cursor technique can improve the eye-gaze interface. We first examined the bubble cursor technique. We developed an eye-gaze-based cursor called the bubble gaze cursor and compared it to a standard eye-gaze interface and a bubble cursor with a mouse. The results revealed that the bubble gaze cursor interface was faster than the standard point cursor-based eye-gaze interface. In addition, the usability and mental workload were significantly better than those of the standard interface. Next, we extended the bubble gaze cursor technique and developed a bubble gaze lens. The results indicated that the bubble gaze lens technique was faster than the bubble gaze cursor method and the error rate was reduced by 54.0%. The usability and mental workload were also considerably better than those of the bubble gaze cursor.
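The core of a bubble cursor is that the cursor's activation area grows until it captures the single nearest target, which amounts to selecting the target whose edge is closest to the pointing position. The sketch below applies that rule to a gaze point with circular targets. The function name `bubble_select` and the target representation are assumptions, and gaze-specific processing such as smoothing or the lens extension is not modeled.

```python
import math


def bubble_select(gaze, targets):
    """Return the name of the target whose edge is nearest to the gaze point.

    gaze: (x, y) gaze position in screen coordinates.
    targets: dict mapping a target name to (center_x, center_y, radius).
    A gaze point inside a target has edge distance 0.
    """
    def edge_distance(target):
        cx, cy, radius = target
        return max(0.0, math.hypot(gaze[0] - cx, gaze[1] - cy) - radius)

    return min(targets, key=lambda name: edge_distance(targets[name]))
```

Because the distance is measured to each target's edge rather than its center, a large target can win over a small one whose center is closer, which is the usual behavior of area cursors.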

Designing Hand Gesture Sequence Recognition Technique for Input While Grasping an Object

Sho Mitarai, Nagisa Munekata, Daisuke Sakamoto, Tetsuo Ono

Transactions of the Virtual Reality Society of Japan 26 (4)

In this paper, we propose a hand gesture sequence recognition technique for human–computer interactions wherein a user grasps an object. Our aim is to develop a technique which can recognize input in the form of various grasp types by recognizing their gesture sequence. We introduce a prototype using electromyography signals and report the results of a user study to validate the proposed technique for interactions in hands-busy situations and evaluate the recognition accuracy of the user gestures. The results demonstrate that our proposed technique showed 93.13% average accuracy for basic grasp types according to a classic taxonomy of grasping. We also discuss the findings of the user study and perspectives for the practical application of the proposed technique.

MyoTilt: a target selection method for smartwatches using the tilting operation and electromyography

Hiroki Kurosawa, Daisuke Sakamoto, and Tetsuo Ono

20th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI '18)

We present the MyoTilt target selection method for smartwatches, which employs a combination of a tilt operation and electromyography (EMG). First, a user tilts his/her arm to indicate the direction of cursor movement on the smartwatch; then s/he applies force to the arm. EMG senses the force and moves the cursor in the direction in which the user is tilting his/her arm. In this way, the user can simply manipulate the cursor on the smartwatch with minimal effort, by tilting the arm and applying force to it. We conducted an experiment to investigate its performance and to understand its usability. Results showed that participants selected small targets with an accuracy greater than 93.89%. In addition, performance significantly improved compared to previous tilting operation methods. Likewise, its accuracy was stable as targets became smaller, indicating that the method is unaffected by the "fat finger problem".
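The combination of tilt direction and EMG-sensed force can be sketched as a per-frame cursor update. This is an illustrative sketch only: the function name `cursor_step`, the activation threshold, the gain, and the assumption that cursor speed scales with the sensed force are all hypothetical and not taken from the paper.

```python
import math


def cursor_step(tilt_x, tilt_y, emg_force, threshold=0.2, gain=100.0, dt=0.02):
    """Return the cursor displacement (dx, dy) in pixels for one update.

    tilt_x, tilt_y: tilt direction of the arm (any magnitude; only the
                    direction is used).
    emg_force: EMG-derived force level, assumed normalized to [0, 1].
    The cursor moves only while the force exceeds `threshold`, at a speed
    of gain * emg_force pixels per second (assumed relationship).
    """
    norm = math.hypot(tilt_x, tilt_y)
    if norm == 0.0 or emg_force < threshold:
        return (0.0, 0.0)  # no tilt direction or no deliberate force
    distance = gain * emg_force * dt
    return (distance * tilt_x / norm, distance * tilt_y / norm)
```

Gating movement on the force threshold means that tilting alone does not move the cursor in this sketch, which separates indicating a direction from committing to the movement.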